Excel’s Power Query (or Get & Transform since Excel 2016) is a great tool for building queries to get data from the web. Within a couple of minutes you can build a query that will pull data from a webpage and transform it into the desired format. This is great for getting data from a webpage that is updated frequently as you will be able easily refresh your query to pull the new data.

Remember, if you’re not using Excel 2016 or later, then you’ll need to install the power query add-in.

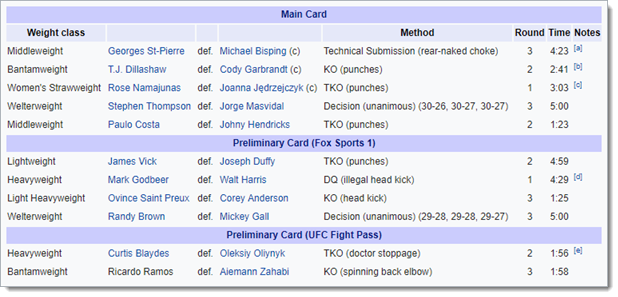

Data to Extract

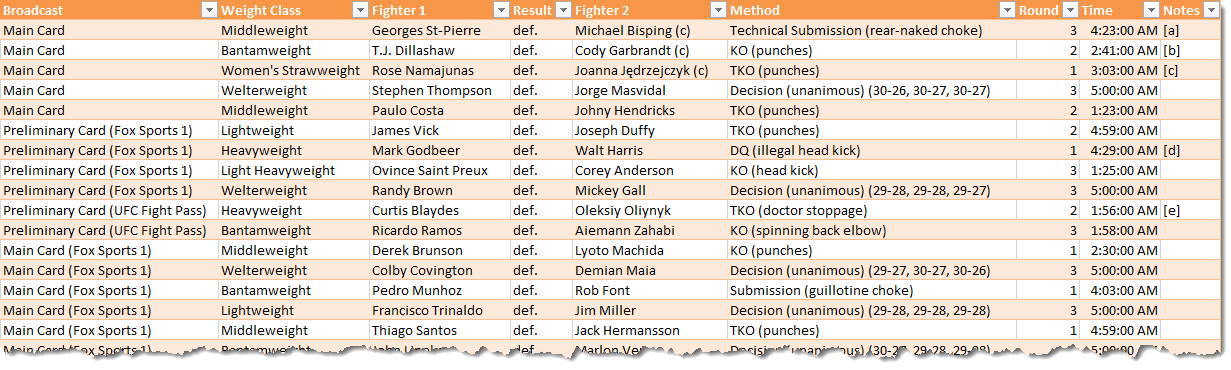

In this post we’re going to take a look at how we can pull data from a series of similar pages. I’m a big MMA fan, so the example we’re going to look at is getting a list of all UFC results from Wikipedia.

If you visit the Wikipedia page for UFC events there’s a table of Past Events. If you click on one of the events you’ll see a results table. If you look at a few more events, you’ll notice the structure is the exact same and they all have a results table. This is the data I want to get, but from all 400+ events listed in the past event section. If the number of pages was any larger, you might be better off using another tool like Python, but we’re going to be using Power Query.

Create a Query Function

First, we will create a query to extract the data on one page. We will then turn this into a function query where the input is an event page URL. This way we can apply the query to each URL in a list of all the URL’s.

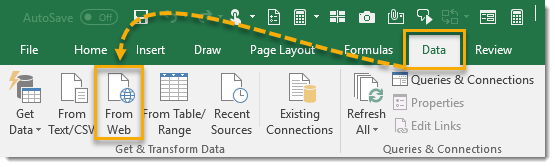

Head to the Data tab in the ribbon and press the From Web button under the Get & Transform section. If you’re working with Excel 2013 or earlier via the add-in, then this will be found under the Power Query tab.

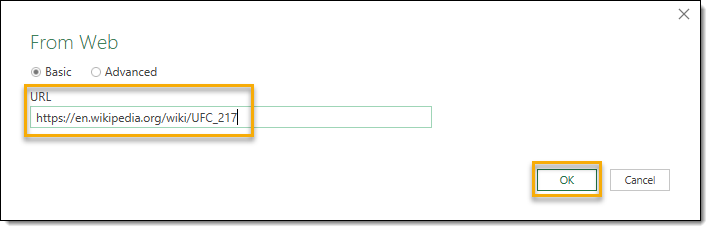

Enter the URL and press the Ok button.

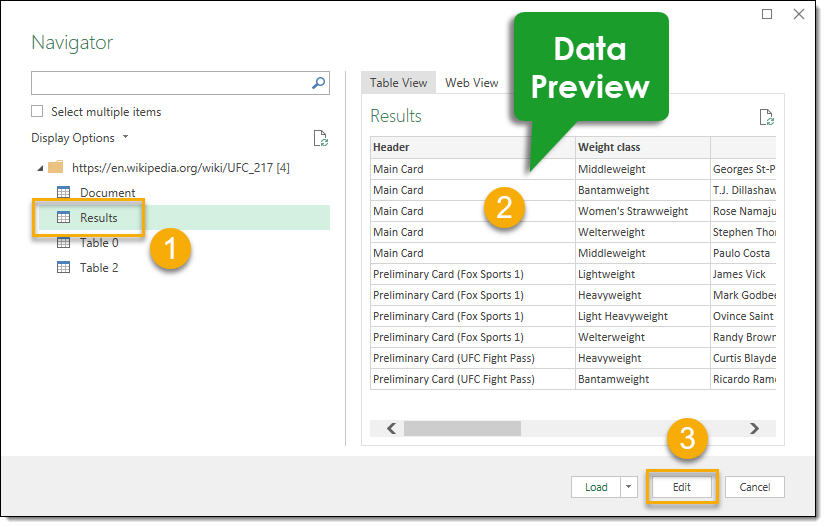

Excel will connect with the page and the Navigator dialog box will open.

- A list of tables available to import from the webpage will be listed. Select the Results table.

- A preview of our selected data will appear.

- Press the Edit button. This will open the Query Editor window.

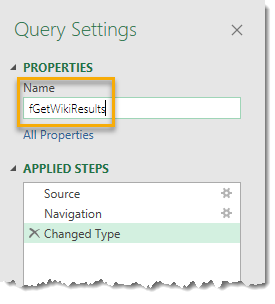

Rename the query to fGetWikiResults. This will be the name we call to use our query function later on.

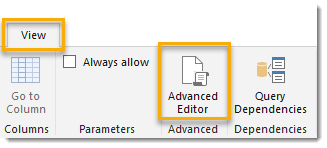

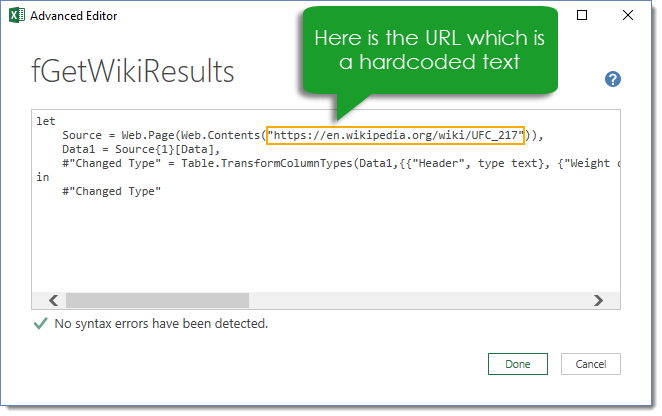

Now we can edit our query to turn it into a query function. Go to the View tab and press the Advanced Editor button. This will allow us to edit the code that Excel has created to extract the data from this URL.

We will need to edit this code to the following. The parts that need to be added/changed are highlighted in red.

let GetResults=(URL) =>

let

Source = Web.Page(Web.Contents(URL)),

Data1 = Source{1}[Data],

#"Changed Type" = Table.TransformColumnTypes(Data1,{{"Header", type text}, {"Weight class", type text}, {"", type text}, {"2", type text}, {"3", type text}, {"Method", type text}, {"Round", Int64.Type}, {"Time", type time}, {"Notes", type text}})

in

#"Changed Type"

in GetResultsPress the Done button when finished editing the query. This will turn our query into a parametrized query with the URL as an input.

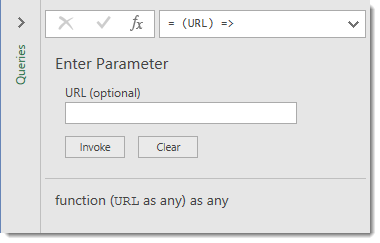

You should see the data preview in the query editor has been replaced with a parameter input. We don’t need to enter anything here and we can just leave it blank.

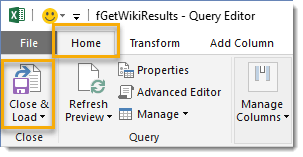

We can then save our query function by going to the Home tab and pressing the Close & Load button.

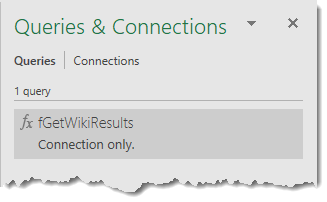

You should now see the fGetWikiResults query function in the Queries & Connections window.

Get a List of URL’s

Now we will need to get our list of event page URL’s from the Past Events page. We could use power query to import this table but this would just pull in the text and not the underlying hyperlink. The best way to get the list of URL’s is to parse the source code from the page. You can view any webpage’s source code by pressing Ctrl + U from the Chrome browser.

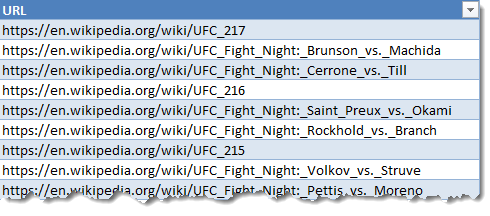

You’ll need to be fairly familiar with HTML to find what you’re looking for. The first couple lines of HTML we are interested in looks like this. I have highlighted the hyperlinks we’re interested in to demonstrate where they are. You can parse these out in another Excel workbook using some filters and basic text formula. We will also need to concatenate the starting part of the address (ie. https://en.wikipedia.org/wiki/UFC_217).

<tr>

<td>416</td>

<td><a href="/wiki/UFC_217" title="UFC 217">UFC 217: Bisping vs. St-Pierre</a></td>

<td><span class="sortkey" style="display:none;speak:none">000000002017-11-04-0000</span><span style="white-space:nowrap">Nov 4, 2017</span></td>

<td><a href="/wiki/Madison_Square_Garden" title="Madison Square Garden">Madison Square Garden</a></td>

<td><a href="/wiki/New_York_City,_New_York" class="mw-redirect" title="New York City, New York">New York City, New York</a>, U.S.</td>

<td><span style="display:none" class="sortkey">7004182010000000000</span>18,201<sup id="cite_ref-21" class="reference"><a href="#cite_note-21">[21]</a></sup></td>

</tr>

<tr>

<td>415</td>

<td><a href="/wiki/UFC_Fight_Night:_Brunson_vs._Machida" title="UFC Fight Night: Brunson vs. Machida">UFC Fight Night: Brunson vs. Machida</a></td>

<td><span class="sortkey" style="display:none;speak:none">000000002017-10-28-0000</span><span style="white-space:nowrap">Oct 28, 2017</span></td>

<td><a href="/wiki/Gin%C3%A1sio_do_Ibirapuera" title="Ginásio do Ibirapuera">Ginásio do Ibirapuera</a></td>

<td><a href="/wiki/S%C3%A3o_Paulo" title="São Paulo">São Paulo</a>, Brazil</td>

<td><span style="display:none" class="sortkey">7004102650000000000</span>10,265<sup id="cite_ref-22" class="reference"><a href="#cite_note-22">[22]</a></sup></td>

</tr>Once we have the full list of event URL’s, we can turn the list into an Excel Table using the Ctrl + T keyboard shortcut and name it URL_List.

Use the Query Function on our URL List

We are now ready to use the fGetWikiResults query function on our list of event URL’s.

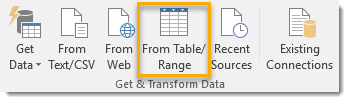

Create a query based on the URL_List table. Select a cell in the table and go to the Data tab in the ribbon and press the From Table/Range button under the Get & Transform section.

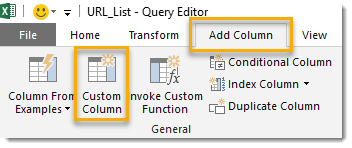

Now we will add a custom column to the query. This is where we’ll invoke our fGetWikiResults query function. Go to the Add Column tab and press the Custom Column button.

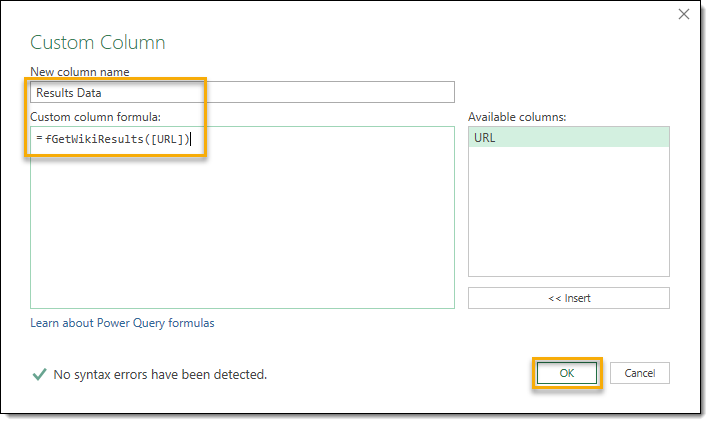

Add a New column name to the custom column and then add the Custom column formula fGetWikiResults([URL]).

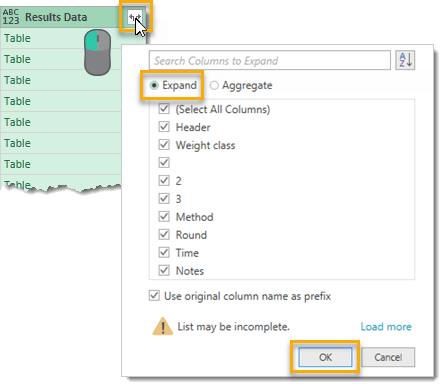

The new custom column will contain a Table for each URL and we will need to expand this table to see the results. Left click on the Results Data column filter icon seen in the column heading. Select Expand from the menu and press the OK button.

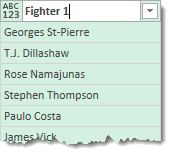

Some of the column headings were missing in our source data, so we can rename them. Double left click on the column heading to rename it.

We can now Close & Load the query and the results data will load into a new sheet. This will take a good few minutes so be patient. This is why you should probably start considering Python or similar tools if you have any more pages than this example.

👉 Find out more about our Advanced Formulas course!

👉 Find out more about our Advanced Formulas course!

this worked great. One thing though; it seems that if one of the websites is not available, the query stops processing. the remaining URLS are not processed. what’s the M code to make it record no data for the unreachable URL and go onto the next until finished?

Hmm, I hadn’t considered this.

When setting up your From Web query, there is an Advanced option. Within the Advanced option you can set a Command timeout. I haven’t tried it out, but I think this will do the trick.

I entered 2 minutes and it created this line of M code:

Source = Web.Page(Web.Contents("https://en.wikipedia.org/wiki/UFC_217", [Timeout=#duration(0, 0, 2, 0)]))I suspect that something like

[Timeout=#duration(0, 0, 0, 30)]would do a shorter timeout of 30 seconds.Let me know if it works!

How can this be automated, by VBA and clicking a button?

Excellent work it saved a lot of time. However, I realized that it only works for websites that has a one-row table. Otherwise, it gives an error. is there a way to make it export those with multiple rows as well?

Thanks

Hmm, not sure what you mean by one row. The example in the post has multiple rows and works fine. What is the site you are trying?

Just remember this will only work for table HTML tags in the proper structure. See example.

This worked really well for but I was initially getting errors – the URL list needs to have the title URL

It doesn’t have to be named URL, but when creating the custom column, you need to use fGetWikiResults([Your Column Name]) instead of fGetWikiResults([URL]).

thanks, I’m getting “cannot convert null to type text”, do you know how to mitigate this? many thanks

actually I think I’ve figured it out, its a blank row it cant cope with

Yes, that would likely throw an error.

wow

cool trick

you saved my time today! thanks

thanks is usefull

thanks for article

Hi, I tried your example and it worked fine, but I have a common table name “Table 0” that has different column names for each URL. Is there some code I can put in to ignore the column headings? So in the text below the columns are named:

Aston Villa results, Aston Villa Results 2, Aston Villa Results 3, etc. Then for the next URL the column headings change to something like Leeds United results, Leeds United Results 2, Leeds United Results 3, etc.

let

Source = Web.Page(Web.Contents(“https://www.soccerstats.com/team.asp?league=england2&stats=24-aston-villa”)),

Data0 = Source{0}[Data],

#”Promoted Headers” = Table.PromoteHeaders(Data0, [PromoteAllScalars=true]),

#”Changed Type” = Table.TransformColumnTypes(#”Promoted Headers”,{{“Aston Villa results”, type date}, {“Aston Villa results_1”, type text}, {“Aston Villa results_2”, type text}, {“Aston Villa results_3”, type text}, {“Aston Villa results_4”, type text}, {“Aston Villa results_5”, type text}, {“Aston Villa results_6”, type text}, {“Aston Villa results_7″, type text}})

in

#”Changed Type”

Great if you can help.

Cheers,

John

Sorry for the delay! Good question.

I would remove the two steps that promote headers and change the data type, so that you have this:

letSource = Web.Page(Web.Contents("https://www.soccerstats.com/team.asp?league=england2&stats=24-aston-villa")),

Data0 = Source{0}[Data]

in

Data0

This way all the columns will have the generic names Column1, Column2 etc…

Then when everything is combined, filter out the rows of data that contain the Aston Villa results, Leeds United Results etc… Then change data types and rename columns appropriately.

Hi! I am trying to download data but get the following error “Token Identifier expected”. Whats wrong?

let GetName=(“https://www.spordiregister.ee/en/organisatsioon/list?spordiala_id=83&esrliik=EOK%2CKL%2CSAL%2CSY%2CRL”) =>

let

Source = Web.Page(Web.Contents(“https://www.spordiregister.ee/en/organisatsioon/list?spordiala_id=83&esrliik=EOK%2CKL%2CSAL%2CSY%2CRL”)),

Data0 = Source{0}[Data],

#”Changed Type” = Table.TransformColumnTypes(Data0,{{“Name”, type text}, {“Reg.no”, Int64.Type}, {“Municipality”, type text}, {“Activities”, type text}, {“Sport”, Int64.Type}})

in

#”Changed Type”

in GetName

This M code is expecting a parameter input but there isn’t any. This post is meant for importing multiple pages. It sets up a function with a parameter for the URL’s of the multiple pages. It looks like you only need to import one page, so this isn’t what you need.

Just use the Data > From Web and paste in your URL. That’s what you’re looking for.

If i want change the page number or any other website parameter for which data is variables, means sometimes date for the parameters are available and sometimes not. When i run query with all web address included, i am getting error. Is there any way to keep the variable inputs in the URL list and power query skips if no data available on website.

xxxxxxxxxxxxxx&pageNum=3&pageSize=100&filter=status%3AOpen

xxxxxxxxxxxxxx&pageNum=3&pageSize=100&filter=status%3AClosed

xxxxxxxxxxxxxx&pageNum=3&pageSize=100&filter=status%3AWIP

Hi Radha, in the custom column step use this formula instead.

try fGetWikiResults([URL]) otherwise nullThis was awesome! Any chance you can do the same tutorial for individual fighters wikis? And particularly grabbing the data from each fighter’s info box (which is also html table) on their wiki page? I tried to apply the same logic you’ve described above to that task but can’t seem to avoid errors in that task

It would be the same process as this tutorial.

Dear John,

Thanks a lot for your help with this tutorial !

I would like to know if there’s a way to catch the hyperlink of a picture when using your function ?

There’s a website from where i copy some tables but in the tables, there are some pictures pointing to useful stuff. Is there a way to catch the link ?

Thanks by advance for your help

Vincent

Unfortunately, there’s no way to get any links with power query yet.

This is a great solution! Thank you for this. However, I’m running into an issue. I’m querying weather data that includes a column for the time stamp: “Time (PST)”. However, this column name changes depending on whether or not daylight savings is in effect, so the column names switch between “Time (PST)” and “Time (PDT)”. Because of this name switching, Excel runs into an error and stops downloading from the URLs. I’ve tried to solve this by batching this process between daylight savings dates and non-daylight saving dates. But I’m wondering if there’s a way to do it all in one go.

Thanks,

Brenda

Check out this video for dealing with changing column names:

https://www.youtube.com/watch?v=LtVr0r0ld9U

Thanks

Will this work Microsoft power bi?

Yep

Hi John,

Great tutorial! I have run in to one road block and was hoping you could provide some assistance. I have a field that lists zip codes and another field that concatenates the url + zip. When I run this query everything works great until it hits a zip where the website contains no information for it. If zip 12345 (www.url.com/12345) has data, then it collects the information from the table I’ve selected on the site. If zip 67890 (www.url.com/67890) does not contain that table, then that row gets populated with “Error” and stops there. How can I incorporate some sort of error handling to state that if no table data is present for 67890, proceed to the next row?

Thanks!

Wrap it in try… otherwise…

Hi,

I get the error: “cannot convert null to type text”

Please could you assist

I think you need to make sure you’re not using any blank rows of data.

Thanks for the great information. I was wondering if you could help with a javascript heavy site like Quora.com. I get about 100 js errors trying to load the page and then can’t select any content for the query. If I try to download the entire page, I just get the message to enable JS and reload.

Thanks in advance for your help.

Unfortunately, I think you’re out of luck.